PiVR has been developed by David Tadres and Matthieu Louis (Louis Lab).

5. PiVR Software Manual

Warning

If you have the High LED power version of PiVR you must take care to properly shield yourself and others from the potentially very strong LED light to protect eyes and skin!

Important

Several options will open a pop-up. You must close the pop-up in order to interact with the main window.

Important

The software has different functionality if run on a Raspberry Pi as compared to any other PC. This software manual is for the Raspberry Pi version of the software.

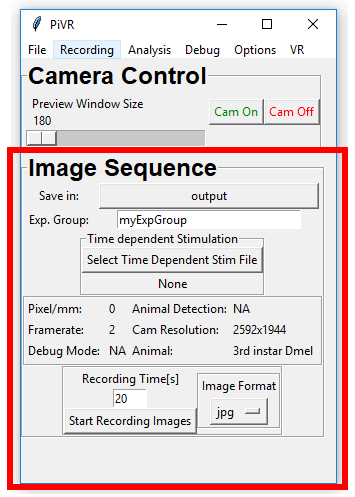

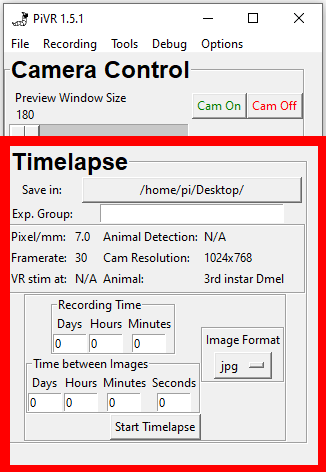

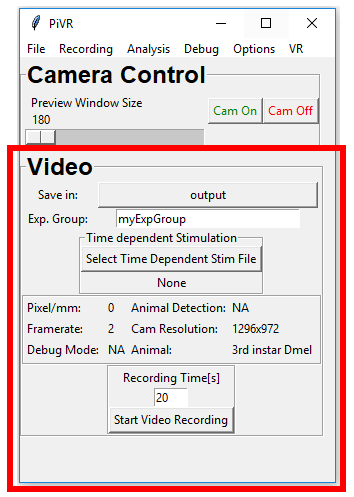

5.3. Preparing a Time Dependent Stimulus File

In your PiVR folder you can find a folder called ‘time_dependent_stim’. On a fresh install it is supposed to contain a single file: blueprint_stim_file.csv.

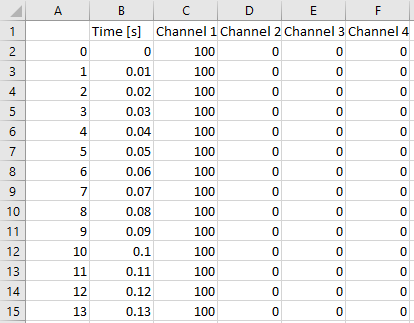

When you open it, e.g. with Excel or another CSV viewer of your choice, you will see that there are 6 columns and many rows:

The first column (A) is just an index and not really important. The second column (B) indicates the time at which the stimulus defined in the columns labelled ‘Channel 1’, ‘Channel 2’, ‘Channel 3’ and ‘Channel 4’ is being presented. See here what a ‘Channel’ is.

0 means the light is completely OFF. 100 means the light is completely ON. A number in between, e.g. 50, means that the light is on at 50/100=50%.

You may use the provided file as a blueprint to create your own stimulus by adding the stimulus intensity at the desired timepoint. Note that the stimulus must be between 0 and 100.
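As a minimal sketch of how an edited stimulus file could be checked before an experiment, the snippet below reads the CSV and reports missing columns and intensities outside 0 to 100. The function name `validate_stimulus_csv` is hypothetical and not part of PiVR; the column names are the ones mentioned in this manual.

```python
import csv
import io

# Column names as mentioned in this manual; the index column is ignored here.
REQUIRED_COLUMNS = ["Time [s]", "Channel 1", "Channel 2", "Channel 3", "Channel 4"]


def validate_stimulus_csv(file_obj):
    """Return a list of problems found in the file; an empty list means OK.

    Hypothetical helper for illustration, not a PiVR function.
    """
    reader = csv.DictReader(file_obj)
    missing = [c for c in REQUIRED_COLUMNS if c not in (reader.fieldnames or [])]
    if missing:
        return [f"missing columns: {missing}"]

    problems = []
    # Line 1 is the header, so data rows start at line 2.
    for line_no, row in enumerate(reader, start=2):
        for channel in REQUIRED_COLUMNS[1:]:
            value = float(row[channel])
            if not 0 <= value <= 100:
                problems.append(f"line {line_no}: {channel}={value} is outside 0-100")
    return problems


# Small in-memory example; Channel 1 in the second data row is out of range.
example = io.StringIO(
    ",Time [s],Channel 1,Channel 2,Channel 3,Channel 4\n"
    "0,0.0,0,0,0,0\n"
    "1,0.01,150,0,0,100\n"
)
example_problems = validate_stimulus_csv(example)
print(example_problems)
```

To check a file on disk, the same function can be called with a file opened via `open(path, newline="")`.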

Alternatively, you can create another file from scratch. It is important that the file is a csv file with the identical column names as provided in the file above.

You can change the time resolution if you wish.
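The following sketch shows one way to generate such a file from scratch with a chosen time resolution. It assumes that the header of the index column is empty and that the time column is called 'Time [s]'; compare with blueprint_stim_file.csv before relying on it. The function names and the pulse parameters are hypothetical.

```python
import csv
import io

# Assumed header layout: empty index header, then time and the four channels.
COLUMNS = ["", "Time [s]", "Channel 1", "Channel 2", "Channel 3", "Channel 4"]


def build_rows(duration_s, resolution_s, pulse_start_s=10.0, pulse_end_s=20.0, intensity=50):
    """One row per timepoint; Channel 1 is set to `intensity` during the pulse."""
    if not 0 <= intensity <= 100:
        raise ValueError("stimulus intensity must be between 0 and 100")
    n_steps = int(round(duration_s / resolution_s))
    rows = []
    for i in range(n_steps):
        t = round(i * resolution_s, 6)  # avoid floating point noise in the file
        channel_1 = intensity if pulse_start_s <= t < pulse_end_s else 0
        rows.append([i, t, channel_1, 0, 0, 0])
    return rows


def write_stimulus_csv(file_obj, rows):
    """Write header and rows to an already opened text file object."""
    writer = csv.writer(file_obj, lineterminator="\n")
    writer.writerow(COLUMNS)
    writer.writerows(rows)


# 30 seconds at 0.01 s resolution, written to memory for demonstration.
rows = build_rows(duration_s=30, resolution_s=0.01)
buffer = io.StringIO()
write_stimulus_csv(buffer, rows)
print(len(rows), "rows;", "header:", buffer.getvalue().splitlines()[0])
```

To write to disk instead of memory, pass a file opened with `open("my_stim_file.csv", "w", newline="")` to `write_stimulus_csv`.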

Important

What is a good time resolution to program into the time dependent stimulus file? It depends:

Internally, PiVR keeps track of time using timestamps from the camera. It then calls numpy.searchsorted on the provided ‘Time [s]’ column.

The algorithm is fast, but at a low time resolution it can lead to unexpected results because it always stops at the first value that is not smaller than the one it is looking for.

For example, assume you provide one timepoint per second while recording at 10 frames per second. For the first frame at t=0, PiVR presents the stimulus defined for t=0. At t=0.1 s, however, it already presents the stimulus defined for t=1 s.

A good compromise between precision and file size for, e.g., 10 frames per second is a resolution of 0.01 seconds (10 ms). If you want to use higher frame rates AND you need very precise stimuli, you should increase the resolution to 0.001 seconds (1 ms). A finer resolution is not useful, considering that PiVR can’t run at frequencies above 90 Hz (about 11 ms per frame).
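The effect of the time resolution can be reproduced with a few lines of NumPy. This is a sketch and not PiVR's actual code: it assumes that numpy.searchsorted is called with its default arguments, and the clamping to the last row is an addition made here so the example never indexes past the end of the array. The function name `stimulus_index` is hypothetical.

```python
import numpy as np


def stimulus_index(time_column, frame_time):
    """Index of the first timepoint that is not smaller than frame_time.

    Assumes the default behaviour of numpy.searchsorted (side='left').
    The clamp to the last row is an assumption made for this sketch.
    """
    idx = int(np.searchsorted(time_column, frame_time))
    return min(idx, len(time_column) - 1)


coarse = np.arange(0, 5, 1.0)     # one timepoint per second
fine = np.arange(0, 500) / 100.0  # one timepoint every 0.01 s

# First three frames of a recording at 10 frames per second.
for frame_time in (0.0, 0.1, 0.2):
    used_coarse = coarse[stimulus_index(coarse, frame_time)]
    used_fine = fine[stimulus_index(fine, frame_time)]
    print(f"frame at {frame_time:.1f} s: coarse file uses t={used_coarse:.2f} s, "
          f"fine file uses t={used_fine:.2f} s")
```

With the coarse file, the frame at 0.1 s is already assigned the row for 1 s, whereas the fine file returns the row for 0.1 s.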

Note

Before v1.7.0, the Time Dependent Stimulus File was defined per frame. The time-based method described above was implemented to give better control over when exactly a stimulus is presented. The frame-based method could introduce inconsistencies between experiments, and it is therefore strongly recommended to use the method described above.

If you must use the frame-based Time Dependent Stimulus File you may find more information here.

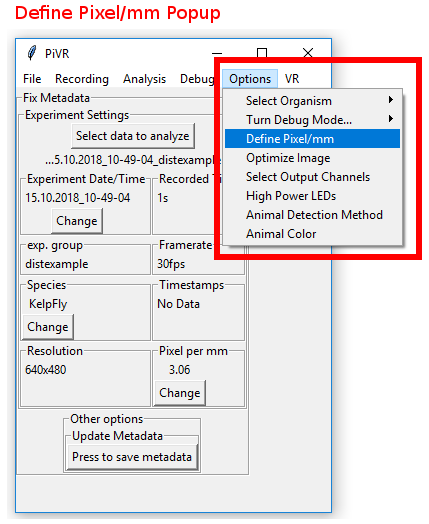

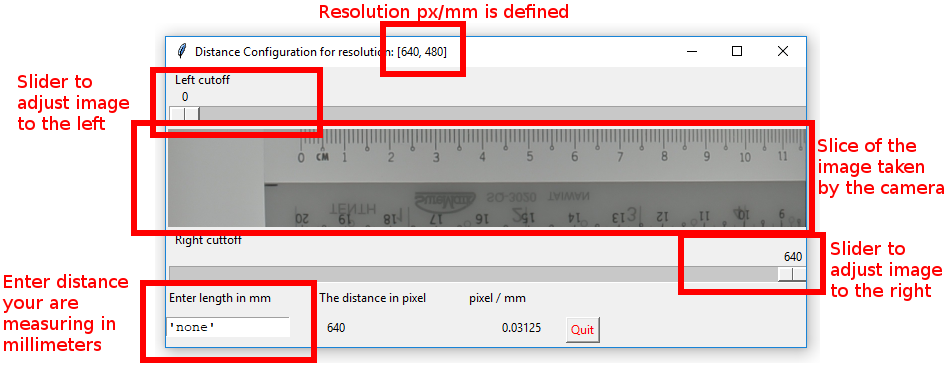

5.4. Set Pixel/mm

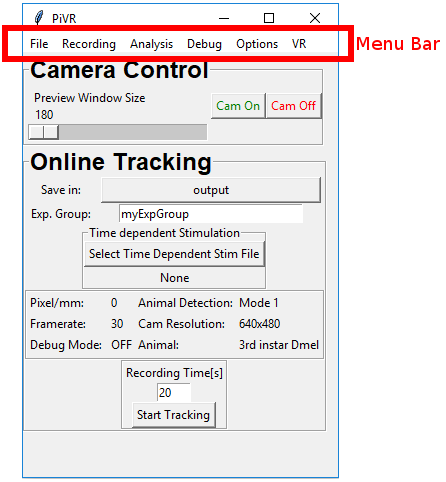

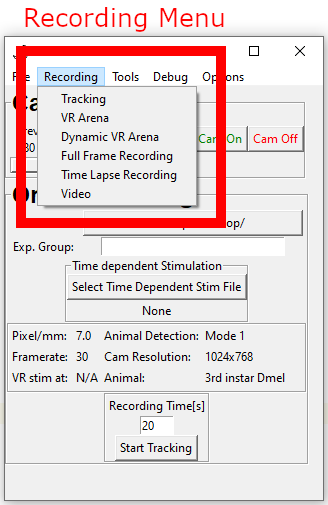

In order to set Pixel/mm for your resolution, press the ‘Options’ Menu in the Menu Bar. Then select ‘Define Pixel/mm’.

In the popup window you will see the following features:

The resolution you are currently using. The defined value will only be valid for this resolution.

The left and right cutoff sliders. By moving them you can measure the distance.

A slice of the image taken by the camera. Place an object of known length horizontally in front of the camera.

A text field to enter the length you want to measure.

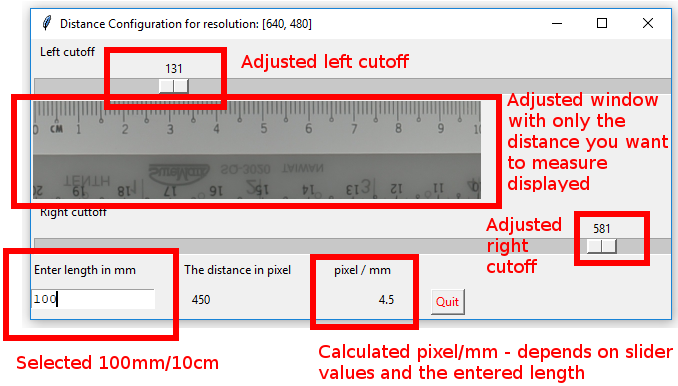

Below is an example of an adjusted distance configuration window. Once you are satisfied with the adjustments you’ve made, hit the quit button.

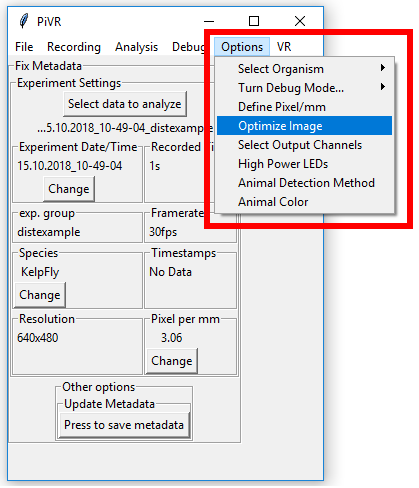
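The arithmetic behind this window is presumably a simple ratio: the distance in pixels between the two cutoff sliders divided by the length you entered. The sketch below illustrates this under that assumption; `pixel_per_mm` and its parameters are hypothetical names, not PiVR's API.

```python
def pixel_per_mm(left_cutoff_px, right_cutoff_px, length_mm):
    """Pixel/mm from the two slider positions and the measured length.

    Assumes the value is the pixel distance divided by the real length.
    """
    if length_mm <= 0:
        raise ValueError("the measured length must be positive")
    if right_cutoff_px <= left_cutoff_px:
        raise ValueError("the right cutoff must be to the right of the left cutoff")
    return (right_cutoff_px - left_cutoff_px) / length_mm


# Example: an 80 mm object spans pixel 100 to pixel 500 in the image slice.
print(pixel_per_mm(100, 500, 80.0))
```

Because the pixel distance depends on the camera resolution, a value obtained this way would only hold for the resolution at which it was measured.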

5.5. Adjust image

In order to set any options related to the image, press the ‘Options’ Menu in the Menu Bar. Then select ‘Optimize Image’.

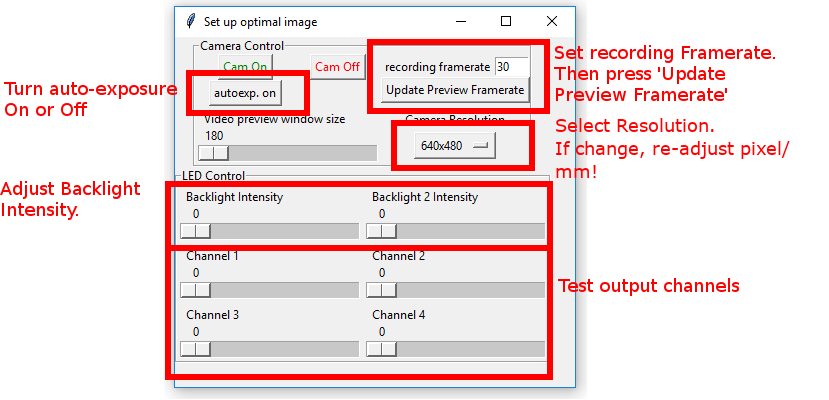

This popup should be used to set up the image in the optimal way:

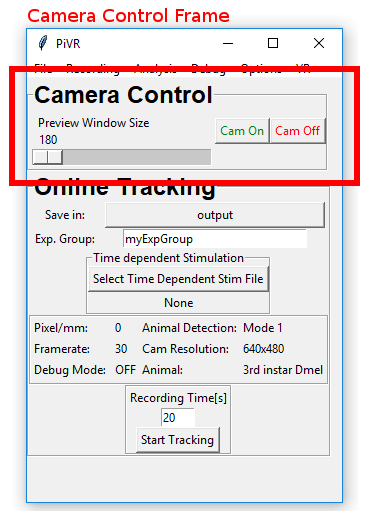

Turn the camera on (‘Cam On’) if it’s not on already.

Adjust the preview size so that you can comfortably see both the preview and the popup.

Set the frame rate as desired.

Press the ‘Update Preview Framerate’ button.

Set the resolution you’d like to use for the recording.

Important

For Online Tracking and Closed Loop Experiments only 640x480, 1024x768 and 1296x972 have been tested.

Make sure the autoexposure button says ‘autoexp on’.

Turn the Backlight Intensity up. It is normal to only see something above 150’000. 400’000-500’000 is often a good value to choose.

If you have Backlight 2 intensity on one of the GPIOs (see define GPIO output channels) you can also adjust Backlight 2 intensity at this point.

To test your output channels, slide the appropriate slider to the right. At the beginning of any experiment, these will be turned off again. To keep a stimulus ON for the duration of the experiment use the Backlight 2 intensity.

5.5.1. Set up optimal image

In order to set up optimal image parameters I usually do the following:

Turn ‘Cam On’.

Set ‘autoexp on’.

Pull ‘Backlight Intensity’ slider all the way to the left (Image will be dark).

Now pull the ‘Backlight Intensity’ slider to the right. As soon as I see an image in the camera I go another 100’000 to the right - this way I’m not at the lower detection limit of the camera.

Then I turn ‘autoexp off’.

Often it can improve the image if I pull the ‘Backlight Intensity’ slider a bit more to the right, effectively overexposing the image a bit.

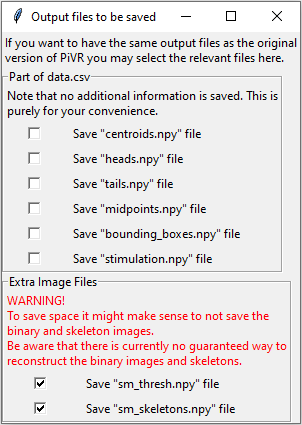

5.6. Define Output Files

Initial versions of PiVR saved data such as centroid position not only in the data.csv file but also in separate npy files.

With version 1.6.9 the goal was to reduce clutter in the experimental folder. Redundant files are no longer saved by default.

To keep backward compatibility, this option allows users to save files explicitly as in the earlier versions of PiVR.

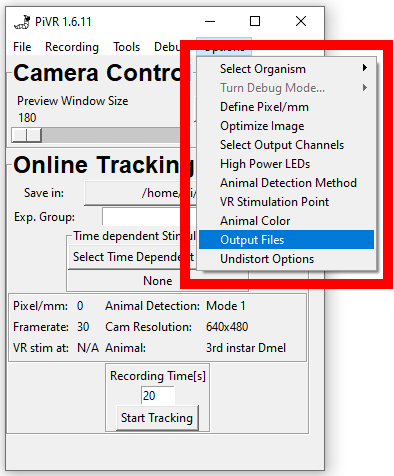

To find the menu, press the ‘Options’ menu in the Menu Bar. Then select ‘Output Files’.

The popup will allow you to select any of the previously saved numpy files:

Centroids.npy

Heads.npy

Tails.npy

Midpoints.npy

Bounding_boxes.npy

Stimulation.npy

In addition, you have the option to save significant amounts of space by not saving the binary images and the skeletons.

These are still saved by default, as there is currently no way to recreate identical files afterwards. See here for discussion and examples where it fails.

If you know you won’t need the binary images and/or the skeletons you have the option to select that here.
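If the optional numpy files are saved, they can be read back with numpy.load. The sketch below loads whichever of them are present in a folder. It assumes the files are stored under exactly the names listed above, which may not match your experiment folders; the function name and the array shape used in the demo are placeholders.

```python
import tempfile
from pathlib import Path

import numpy as np

# File names as listed in this section (assumed to be the names on disk).
OPTIONAL_FILES = (
    "Centroids.npy",
    "Heads.npy",
    "Tails.npy",
    "Midpoints.npy",
    "Bounding_boxes.npy",
    "Stimulation.npy",
)


def load_optional_arrays(experiment_folder):
    """Return a dict {file name: array} for every optional file that exists."""
    folder = Path(experiment_folder)
    arrays = {}
    for name in OPTIONAL_FILES:
        path = folder / name
        if path.exists():
            arrays[name] = np.load(path)
    return arrays


# Demo: a temporary folder stands in for an experiment folder.
# The (5, 2) shape is an arbitrary placeholder, not PiVR's actual layout.
with tempfile.TemporaryDirectory() as folder:
    np.save(Path(folder) / "Centroids.npy", np.zeros((5, 2)))
    loaded = load_optional_arrays(folder)

print(sorted(loaded))
```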

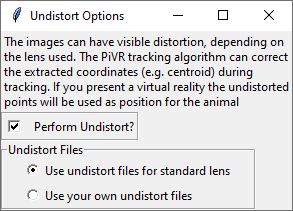

5.7. Undistort Options

In v1.7.0, the undistort feature was added. See here (GitLab) or here (PiVR.org) for a detailed explanation of what the problem is and how PiVR solves it.

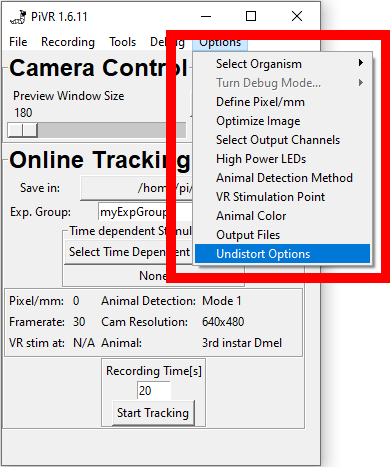

To find the menu, press the ‘Options’ menu in the Menu Bar. Then select ‘Undistort Options’.

Note

This option cannot be turned on if OpenCV is not installed. If the menu is greyed out, make sure to install OpenCV. In that case you will also see ‘noCV2’ written next to the version number of PiVR.

If you are on the Raspberry Pi, the easiest way to install OpenCV is to wipe the SD card, reinstall the OS and make a clean install of the PiVR software using the installation file.

On a PC, install it using conda: (1) activate the PiVR environment, then (2) enter: conda install -c conda-forge opencv
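To check from Python whether OpenCV is available in the active environment, you can test whether the cv2 module can be found. This is a generic sketch using the standard library; it is not necessarily how PiVR itself decides to show ‘noCV2’, and the function name is hypothetical.

```python
import importlib.util


def opencv_available():
    """True if the cv2 module (OpenCV's Python binding) can be imported."""
    return importlib.util.find_spec("cv2") is not None


print("OpenCV found" if opencv_available() else "OpenCV not found (noCV2)")
```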

In this menu you can choose to perform undistort during tracking or not.

If you are not using the standard lens that comes with the camera in the BOM you need to use your own undistort files.

See here how to create your own files.

5.8. Define GPIO output channels

What is a ‘Channel’?

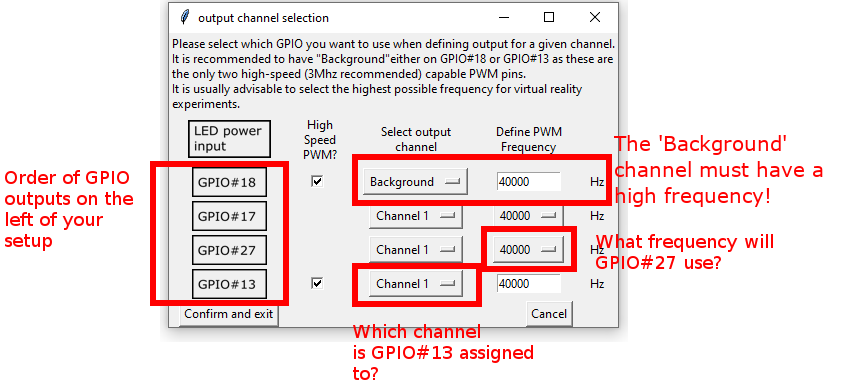

There are 4 GPIOs that can be used to control LEDs: GPIO#18, GPIO#17, GPIO#27 and GPIO#13. (Side Note: GPIO#18 and GPIO#13 are special as they are the only ones that are capable of providing PWM frequencies above 40kHz.)

To give the user maximum flexibility, each of the GPIOs can be assigned a ‘Channel’ which can be controlled independently in the software. This also allows the ‘bundling’ of GPIOs into Channels.

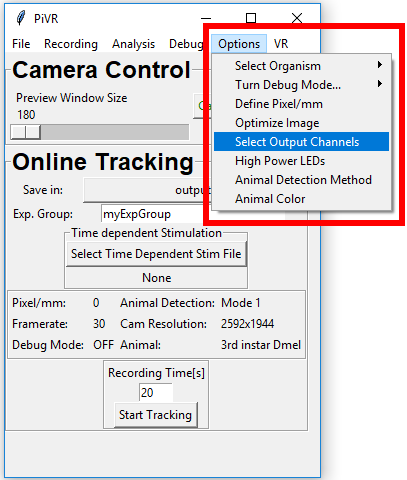

In order to define GPIO output channels for your setup, press the ‘Options’ menu in the Menu Bar. Then select ‘define GPIO output channels’.

The images on the far left indicate which output on the left of your setup corresponds to which GPIO (e.g. the one closest to the LED power input is GPIO#18).

Channel 1 is always defined as the channel that is used for the Virtual Arena experiments.

Channel 1, Channel 2, Channel 3 and Channel 4 can be separately addressed using the time dependent stimulus files.

The standard frequency values are set for the normal PiVR setup running exclusively with LED strips:

| GPIO # | Output Channel | PWM Frequency |
|---|---|---|
| #18 | Background | 40’000 Hz |
| #17 | Channel 1 | 40’000 Hz |
| #27 | Channel 1 | 40’000 Hz |
| #13 | Channel 1 | 40’000 Hz |

If you are building the High Powered Version you have to modify the PWM frequency to match the values in the datasheet:

| GPIO # | Output Channel | PWM Frequency |
|---|---|---|
| #18 | Background | 40’000 Hz |
| #17 | Channel 1 | 1’000 Hz |
| #27 | Channel 1 | 1’000 Hz |
| #13 | Channel 1 | 1’000 Hz |
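The two configurations can be written down as plain Python dictionaries, which makes the ‘bundling’ of GPIOs into Channels and the difference between the setups explicit. This is only an illustration of the tables in this section; the variable and function names are hypothetical and do not reflect how PiVR stores these settings.

```python
from collections import defaultdict

# GPIO number -> (output channel, PWM frequency in Hz), copied from the tables.
STANDARD = {
    18: ("Background", 40_000),
    17: ("Channel 1", 40_000),
    27: ("Channel 1", 40_000),
    13: ("Channel 1", 40_000),
}
HIGH_POWER = {
    18: ("Background", 40_000),
    17: ("Channel 1", 1_000),
    27: ("Channel 1", 1_000),
    13: ("Channel 1", 1_000),
}


def gpios_by_channel(config):
    """Group GPIO numbers by the channel they are assigned to."""
    bundles = defaultdict(list)
    for gpio, (channel, _frequency) in config.items():
        bundles[channel].append(gpio)
    return dict(bundles)


def changed_frequencies(old, new):
    """GPIOs whose PWM frequency differs, as {gpio: (old Hz, new Hz)}."""
    return {
        gpio: (old[gpio][1], new[gpio][1])
        for gpio in old
        if old[gpio][1] != new[gpio][1]
    }


print(gpios_by_channel(STANDARD))
print(changed_frequencies(STANDARD, HIGH_POWER))
```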

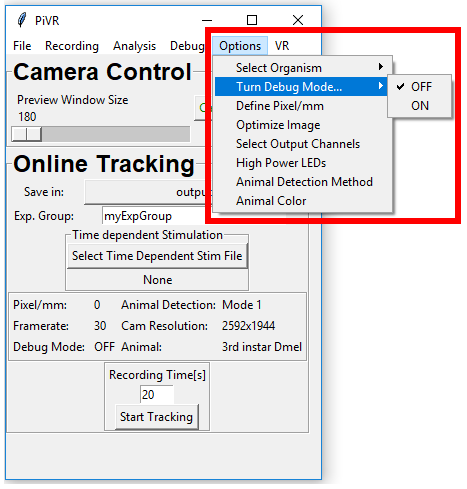

5.9. Turn Debug Mode ON/OFF

In order to turn debug mode ON or OFF, press the ‘Options’ menu in the Menu Bar. Then go to ‘Turn Debug Mode…’ and select either ‘OFF’ or ‘ON’.

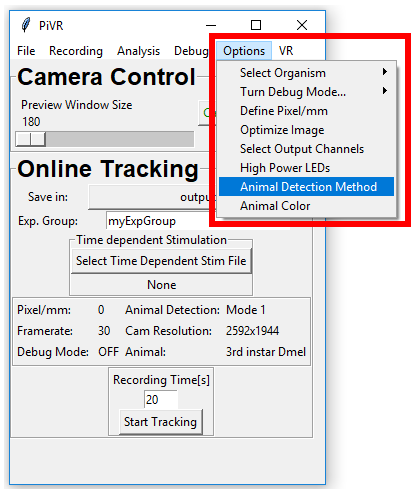

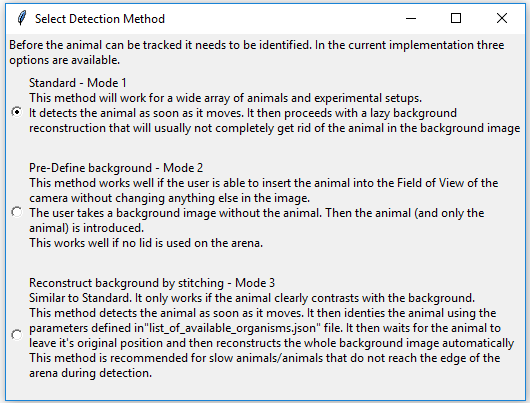

5.10. Select Animal Detection Mode

In order to define the animal detection method, press the ‘Options’ menu in the Menu Bar. Then press ‘Animal Detection Method’.

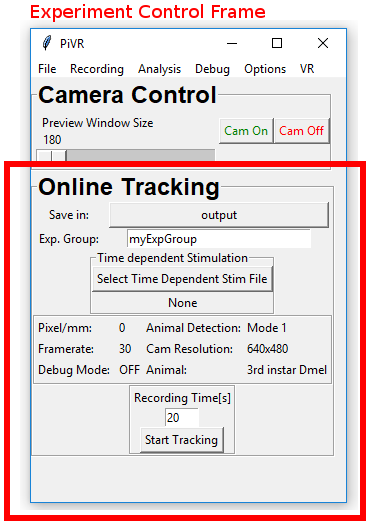

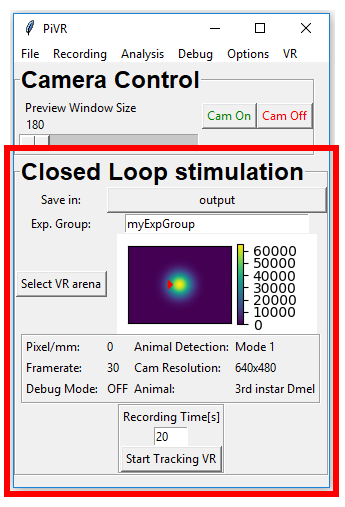

When in either ‘Online Tracking’ or ‘Closed Loop Stimulation’ the animal needs to be detected. There are 3 modes that can be used to detect the animal. For most cases Mode 1 (Standard) will be fine. If you need a clear background image consider Mode 2 or Mode 3.

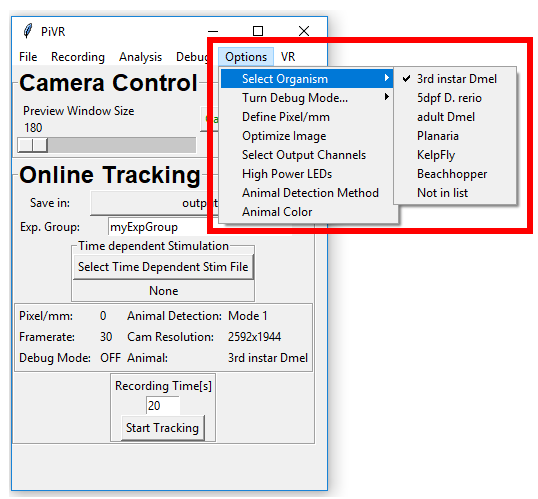

5.11. Select Organism

In order to select an organism, press the ‘Options’ menu in the Menu Bar. Then go to ‘Select Animal’ and select your animal.

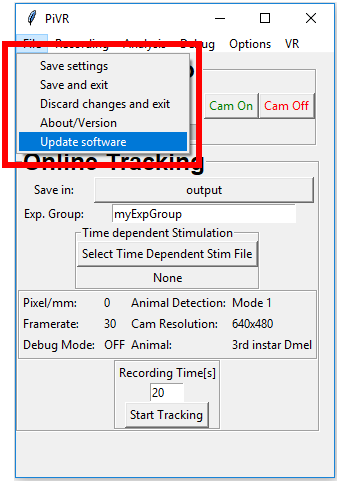

5.12. Updating the software

In order to update the software on your Raspberry Pi, press the ‘File’ menu in the Menu Bar. Then go to ‘Update Software’.

Note

Please make sure you are connected to the Internet when updating.

Technicalities:

This will first update the Linux system by calling:

sudo update

Next, it will download the newest version from the gitlab repository by calling:

git pull

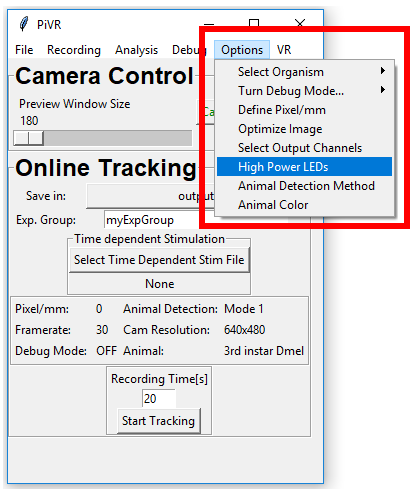

5.13. High/Low Power LED switch

In order to choose between high and low power LED setups, press the ‘Options’ menu in the Menu Bar. Then go to ‘High Power LEDs’.

Select either Standard or High power version depending on the setup you have.

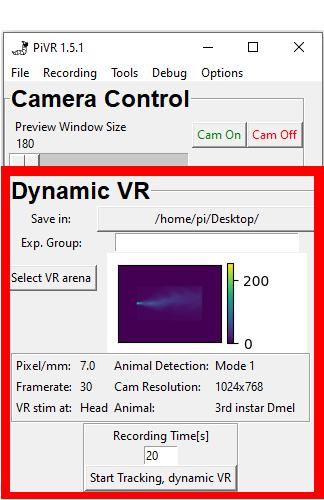

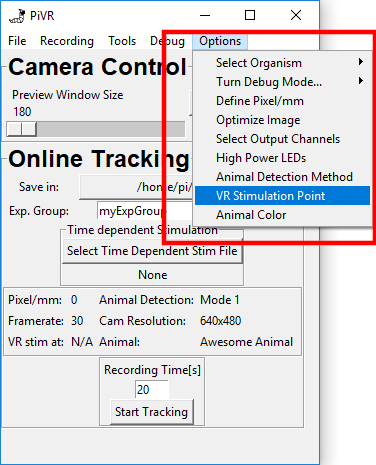

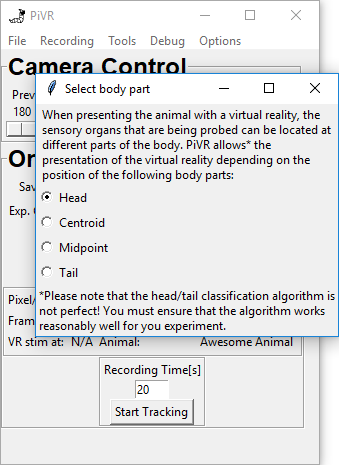

5.14. Select Body Part for VR stimulation

When running virtual reality experiments the cells you are interested in could be at different places of the animal.

PiVR allows you to present the virtual reality based on one of several body parts that are identified during tracking.

Note

As the difference between centroid and midpoint is not straightforward, please see here for an explanation.

The Head (standard) will probably make a lot of sense in many experiments, as a lot of sensory neurons of many animals are located there. However, be aware that the Head/Tail classification algorithm is not perfect and does make mistakes. There is no option to correct for wrong head/tail assignment during the experiment!

The Centroid is probably the most consistently correct point during tracking. Please see here to see how it is defined.

The Midpoint is similar to the centroid, but can be different in flexible animals such as fruit fly larvae.

The Tail is the final option to choose from. We have used the presentation of the virtual reality based on tail position as a control in the past.

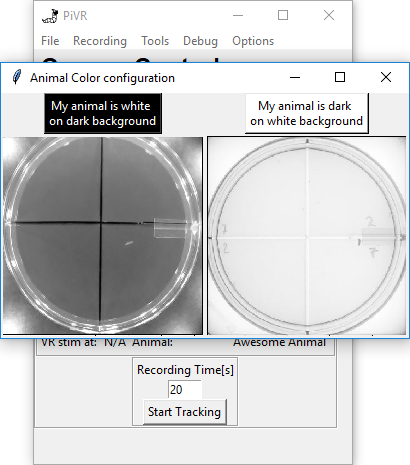

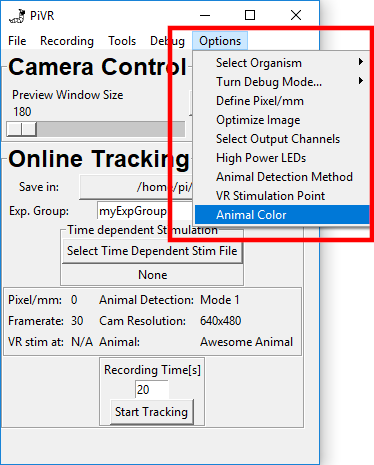

5.15. Animal Color Selection

Depending on your experimental setup, the animal can either be dark on white background due to transillumination, or white on dark background due to side illumination.

The standard setting is dark on white. If you need to change this setting, go to Options->Animal Color.

Now just press the button above the image that describes your experiment.